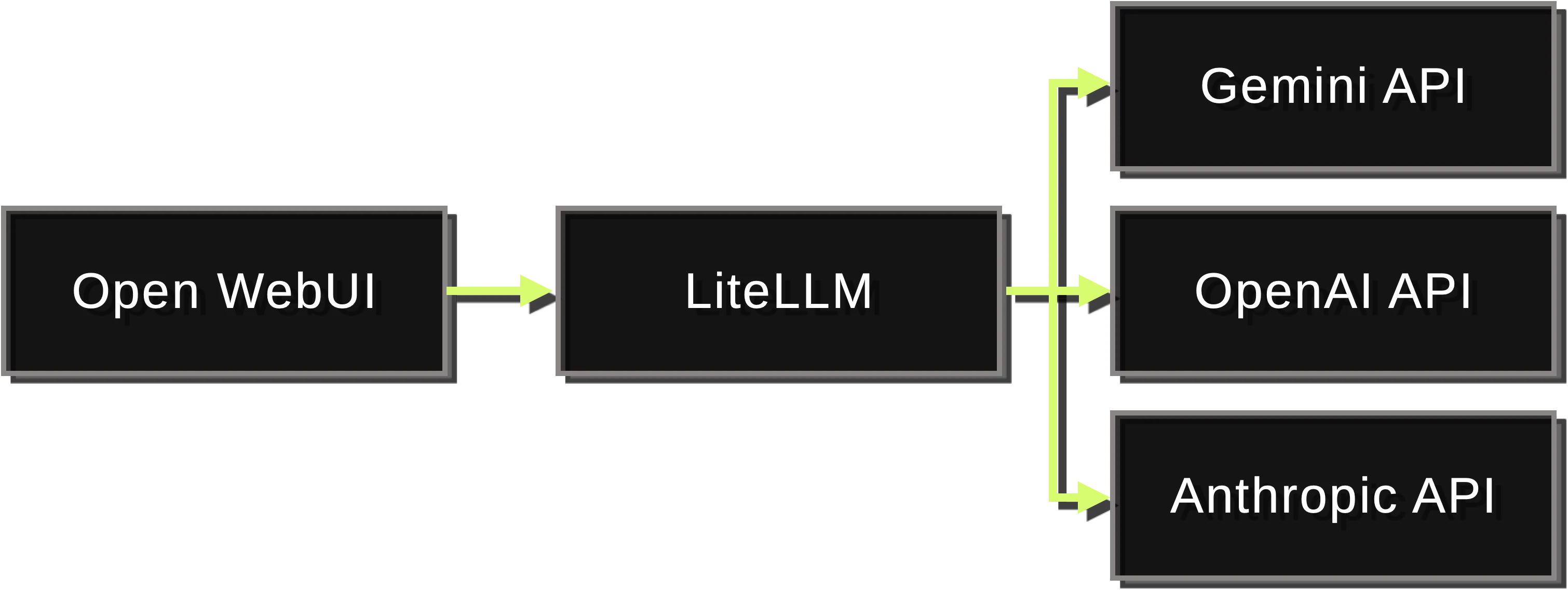

Configuring Open WebUI and LiteLLM with Docker Compose to talk to OpenAI, Anthropic, Gemini, and Perplexity APIs

I want to use a single UI to interact with LLMs from different providers (OpenAI, Anthropic, Google, and Perplexity). It turns out this is possible now, but you have to self-host two pieces of software:

- Open WebUI: A web-based LLM client that works with Ollama and any OpenAI-compatible API.

- LiteLLM: A proxy server that exposes hundreds of LLMs through a unified, OpenAI-compatible API.

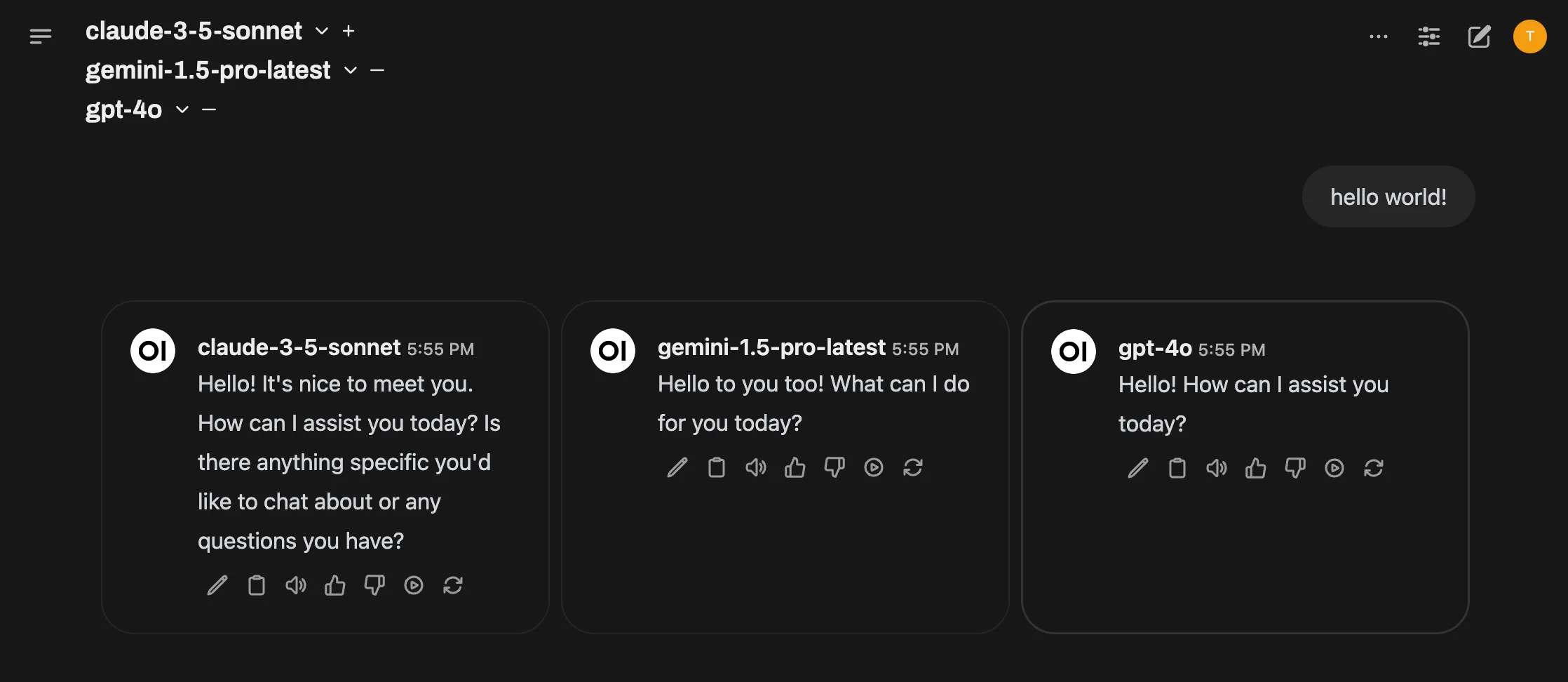

The UI is very flexible. You can choose which model to talk to, or talk to all of them at once.

This is how I set it up:

1. Set up the environment variables. Put the API keys in a `.env` file.

   ```sh
   OPENAI_API_KEY=
   ANTHROPIC_API_KEY=
   GEMINI_API_KEY=
   PERPLEXITYAI_API_KEY=
   ```

2. Create a LiteLLM config file. List the models in a `litellm_config.yaml` file. The API keys are not repeated here; LiteLLM picks them up from the environment variables.

   ```yaml
   model_list:
     - model_name: gpt-4o
       litellm_params:
         model: openai/gpt-4o
     - model_name: claude-3-5-sonnet
       litellm_params:
         model: anthropic/claude-3-5-sonnet-20240620
     - model_name: gemini-1.5-pro-latest
       litellm_params:
         model: gemini/gemini-1.5-pro-latest
         safety_settings:
           - category: HARM_CATEGORY_HARASSMENT
             threshold: BLOCK_NONE
           - category: HARM_CATEGORY_HATE_SPEECH
             threshold: BLOCK_NONE
           - category: HARM_CATEGORY_SEXUALLY_EXPLICIT
             threshold: BLOCK_NONE
           - category: HARM_CATEGORY_DANGEROUS_CONTENT
             threshold: BLOCK_NONE
     - model_name: pplx-llama-3.1-sonar-huge
       litellm_params:
         model: perplexity/llama-3.1-sonar-huge-128k-online
   ```

3. Create a Docker Compose project. Here's the `docker-compose.yml` file that puts it all together:

   ```yaml
   services:
     webui:
       image: ghcr.io/open-webui/open-webui:main
       restart: unless-stopped
       ports:
         - '127.0.0.1:33371:8080'
       environment:
         # Must match LITELLM_MASTER_KEY of the litellm service below
         - OPENAI_API_KEY=dummy
         # Point Open WebUI at the LiteLLM proxy instead of api.openai.com
         - OPENAI_API_BASE_URL=http://litellm:4000/v1
       volumes:
         - open-webui:/app/backend/data
     litellm:
       image: ghcr.io/berriai/litellm:main-latest
       restart: unless-stopped
       command:
         - '--config=/litellm_config.yaml'
         - '--detailed_debug'
       ports:
         - '127.0.0.1:33372:4000'
       environment:
         - LITELLM_MASTER_KEY=dummy
         # No value given: these are passed through from the .env file
         - OPENAI_API_KEY
         - GEMINI_API_KEY
         - ANTHROPIC_API_KEY
         - PERPLEXITYAI_API_KEY
       volumes:
         - ./litellm_config.yaml:/litellm_config.yaml
   volumes:
     open-webui:
   ```

4. Start the project. Run `docker compose up -d` to start the project, then go to http://localhost:33371/ and create an account there.
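If a model does not show up or respond in Open WebUI, it can help to call the LiteLLM proxy directly. The following is a minimal sketch of such a smoke test, not part of the setup itself. It assumes the proxy is reachable on host port 33372 with the key `dummy`, as in the Compose file above; the helper names `build_chat_request` and `ask` are made up for illustration.

```python
import json
import urllib.request

# Assumptions: host port 33372 and the key "dummy" are taken from the
# Compose file in this post; adjust both if you changed them.
BASE_URL = "http://localhost:33372/v1"
API_KEY = "dummy"


def build_chat_request(model: str, prompt: str) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request for the LiteLLM proxy."""
    body = {
        "model": model,  # should match a model_name in litellm_config.yaml
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {API_KEY}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask(model: str, prompt: str) -> str:
    """Send the request and return the text of the first reply."""
    request = build_chat_request(model, prompt)
    with urllib.request.urlopen(request, timeout=60) as response:
        reply = json.load(response)
    return reply["choices"][0]["message"]["content"]
```

Once the containers are running, `print(ask("gpt-4o", "Say hello"))` should return a reply through the proxy. Swapping in another `model_name` from the config, such as `claude-3-5-sonnet`, exercises a different provider with the same request shape.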